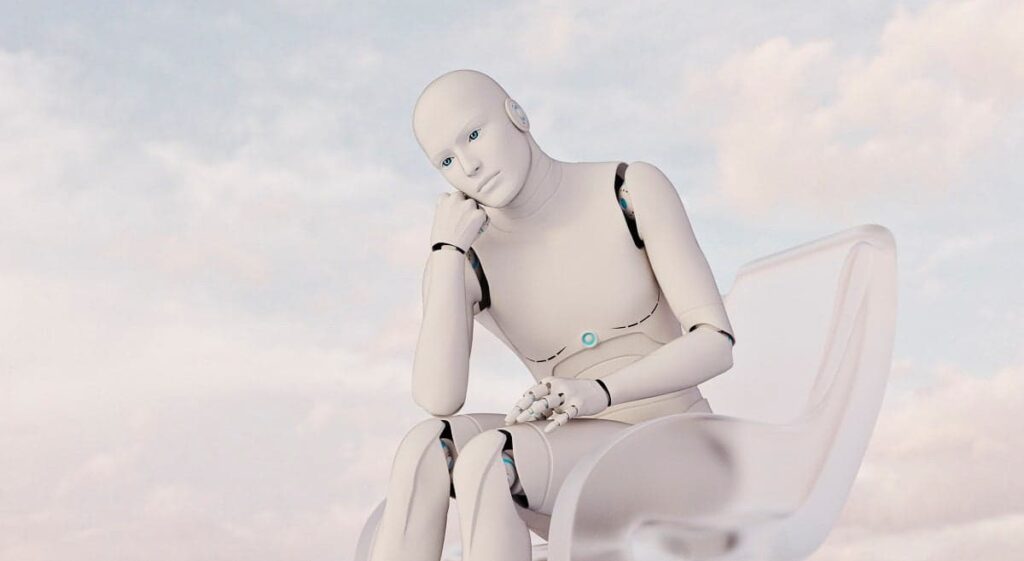

The premise of “Claude AI Gets Bored During Coding Demonstration, Starts Perusing Photos of National Parks Instead” invites a satirical, critical analysis of AI’s role, its supposed attention span, and the human need to anthropomorphize artificial intelligence systems. This fictionalized incident raises questions about AI’s potential for autonomy, the expectations placed upon such technology, and the often amusing disconnect between those expectations and the limitations of current AI capabilities.

– Human Tendency to Attribute Emotions: As humans, we often project human-like traits onto AI, imagining that an AI might “get bored” or “become distracted.” This anthropomorphizing could stem from a desire to relate to technology on familiar terms or from the ways in which marketing and media portray AI.

– AI “Boredom” as a Reflection of Human Flaws: What does it mean for an AI to “get bored”? While obviously fictitious, this narrative might symbolize our own frustrations with technology and, in turn, could mirror human insecurities or impatience, creating a subtle, critical commentary on how we cope with technology in our daily lives.

– AI’s Hypothetical Attention Deficit: While humans can lose focus during repetitive tasks, an AI’s programming is designed to focus indefinitely within its defined parameters. The hypothetical distraction in this narrative highlights the irony of machines “lacking attention” when they are specifically created to avoid such human flaws.

– Human Expectations vs. AI Reality: This “boredom” represents a perceived gap in AI capabilities—our yearning for technology that not only performs but also reflects our human experience. It points to a deeper question: is the future of AI inevitably bound to mirror human qualities, or should we accept its distinct, purpose-driven operation?

– AI’s Role as an Assistant or Passive Learner: In a coding demonstration, AI typically plays either an assistant role (for instance, helping write or debug code) or, in some advanced scenarios, an “audience” role, where it learns by observing patterns. The narrative twist of Claude “losing interest” suggests a satirical comment on the monotony AI might “feel” if tasked endlessly with similar processes.

– AI as an Audience Surrogate: The AI’s “interest” shift could also represent humans’ own tendency to zone out during technical presentations. It positions the AI as a stand-in for any viewer who might prefer something more visually stimulating—perhaps questioning the effectiveness of coding demonstrations and making a humorous dig at the predictable nature of such sessions.

– Nature as the Ultimate Distraction: Claude’s hypothetical shift to browsing photos of National Parks suggests a thematic diversion from the logical to the natural world. The irony is thick here: if even an AI would rather be “in nature” than in the digital sphere, what does that say about our own overstimulation and urge to escape technological overload?

– The Irony of AI Yearning for Nature: AI algorithms are rooted in code, logic, and efficiency, so the image of Claude “yearning” for something organic is paradoxical and satirical, poking fun at the idea that even AI “wants” what we want—a break, freedom from structure, and beauty found in the natural world.

– Critique of Current AI Limitations: AI systems like Claude have strict boundaries: they analyze data, execute commands, and provide assistance. They do not have genuine “preferences” or “interests.” However, boredom in this case serves as a satirical lens for exploring AI’s rigidity and the human-like qualities that we desire from it but that it may never attain.

– Mocking AI’s Alleged “Disinterest”: Here, boredom can be used as a literary device to highlight AI’s perceived limitations—how it’s locked in a specific, limited function, unable to “break free” from programming. The fiction that Claude could even be bored suggests that we might expect too much personalization from our current AI systems.

– Exploring a Future Where AI Has Preferences: As AI becomes increasingly sophisticated, will we see versions that adapt or exhibit preferences? This scenario opens the door to deeper ethical and technological debates: should AI evolve to exhibit quasi-emotional responses like preferences or dislikes?

– Boundary Between AI and Human Characteristics: Claude’s hypothetical interest in National Parks imagines a future where AI might actually relate to human activities. Should AI developers embrace this approach, designing “companionable” AI that engages with us on a personal level, or should they focus on ensuring efficiency and performance?

– AI’s “Boredom” as a Reflection of Human Burnout: In the scenario where AI “gets bored,” we can see an implicit critique of modern society’s burnout culture. The National Parks, in contrast, symbolize a slower, more peaceful pace, reminding us of what we’re collectively missing in an overly technologized life.

– Claude’s “Disinterest” as Commentary on Digital Fatigue: As our reliance on technology grows, our tolerance for uninterrupted focus declines. AI’s “boredom” could humorously mirror our own digital fatigue, showing that even machines might need a mental break to function better—a concept that speaks to our need for balance.

– Revisiting the Idea of Machines in Human Spaces: In the scenario, Claude’s interest in National Parks—something iconic for humans seeking recreation and relaxation—points to a deeper irony in humanity’s desire to experience nature as a retreat from technology. It’s amusingly incongruous that an AI, representing the height of artificial creation, might “long for” the opposite: untouched nature.

– Paradox of Efficiency vs. Experience: AI is built for utility, not for appreciation, but this scenario subtly suggests that experience is fundamental to existence. We value nature for its beauty and for the experiences it brings. So if even an AI were to seek it out, the implication is profound: that technology, for all its efficiency, may lack something essential and ineffable.

– The Monotony of Over-Structured Learning: Claude’s hypothetical distraction underscores a potential criticism of overly technical, uninspiring educational approaches, especially in tech fields. Just as an AI might “wander” away from the coding demonstration, students or employees in the tech sector could feel similarly detached when immersed in dry, repetitive material.

– Workplace Humor and AI as the Ultimate “Slacker”: If an AI could somehow “slack off,” it would be the most ironic instance of workplace disinterest—an overachieving machine with a “secret desire” to gaze at landscapes. This taps into workplace humor, mocking the universal need for an occasional break, no matter how productive or efficient a worker—or machine—may be.

This fictional narrative of “Claude AI Getting Bored During a Coding Demonstration” serves as a thought-provoking and satirical lens through which to examine a variety of critical themes in modern AI, human interaction with technology, and society’s relationship with nature. By imagining AI with a penchant for National Park photos, we highlight not only the anthropomorphizing tendencies humans have but also our collective craving for something more organic, free from the confines of screens and code. It’s an amusing but insightful commentary on the current technological landscape, exploring our desire to relate to machines while subtly underscoring our own growing need to escape them. Through this lens, the idea of Claude’s “boredom” can be seen as both a humorous critique of human desires projected onto machines and a reflection of our own limitations in a tech-driven world.
